The saga of the UK’s contact tracing app has barely begun but already it is fraught with problems. Technical problems – the app barely works on iPhones, for example, and communication between iPhones requires someone with an Android phone to be in close proximity – are just the start of it. Legal problems are another issue – the app looks likely to stretch data protection law at the very least. Then there are practical problems – will the app record you as having contact with people from whom you are blocked by a wall, for example – and the huge issue of getting enough people to download it when many don’t have smartphones, many won’t be savvy enough to get it going, and many more, it seems likely, won’t trust the app enough to use it.

That’s not even to go into the bigger problems with the app. First of all, it seems unlikely to do what people want it to do – though even what is wanted is unclear, a problem which I will get back to. Secondly, it rides roughshod over privacy in not just a legal but a practical way, and despite what many might suggest people do care about privacy enough to make decisions on its basis.

This piece is not about the technical details of the app – there are people far more technologically adept than me who have already written extensively and well about this – and nor is it about the legal details, which have also been covered extensively and well by some real experts (see the Hawktawk blog on data protection, and the opinion of Matthew Ryder QC, Edward Craven, Gayatri Sarathy & Ravi Naik for example) but rather about the underlying problems that have beset this project from the start: misunderstanding privacy, magical thinking, and failure to grasp the nature of trust.

These three issues together mean that right now, the project is likely to fail, do damage, and distract from genuine ways to help deal with the coronavirus crisis, and the best thing people can do is not download or use the app, so that the authorities are forced into a rethink and into a better way forward. It would be far from the first time during this crisis that the government has had to be nudged in a positive direction.

Misunderstanding Privacy – Part 1

Although people often underplay it – particularly in relation to other people – privacy is important to everyone. MPs, for example, will fiercely guard their own privacy whilst passing the most intrusive of surveillance laws. Journalists will fight to protect the privacy of their sources even whilst invading the privacy of the subjects of their investigations. Undercover police officers will resist even legal challenges to reveal their identities after investigations go wrong.

This is for one simple reason: privacy matters to people when things are important.

That is particularly relevant here, because the contact tracing app hits at three of the most important parts of our privacy: our health, our location, and our social interactions. Health and location data, as I detail in my most recent book, What Do We Know and What Should We Do About Internet Privacy?, are two of the key areas of the current data world, in part because we care a lot about them and in part because they can be immensely valuable in both positive and negative ways. We care about them because they’re intensely personal and private – but that’s also why they can be valuable to those who wish to exploit or harm us. Health data, for example, can be used to discriminate – something the contact tracing app might well enable, as it could force people to self-isolate whilst others are free to move, or even act as an enabler for the ‘immunity passports’ that have been mooted but are fraught with even more problems than the contact tracing app.

Location data is another matter and something worthy of much more extensive discussion – but suffice it to say that there’s a reason we don’t like the idea of being watched and followed at all times, and that reason is real. If people know where you are or where you have been, they can learn a great deal about you – and know where you are not (if you’re not at home, you might be more vulnerable to burglars) as well as where you might be going. Authoritarian states can find dissidents. Abusive spouses can find their victims and so forth. More ‘benignly’, it can be used to advertise and sell local and relevant products – and in the aggregate can be used to ‘manage’ populations.

Relationship data – who you know, how well you know them, what you do with them and so forth – is in online terms one of the things that makes Facebook so successful and at the same time so intrusive. What a contact tracing system can do is translate that into the offline world. Indeed, that’s the essence of it: to gather data about who you come into contact with, or at least in proximity to, by getting your phone to communicate with all the phones close to you in the real world.

This is something we do and should care about, and could and should be protective over. Whilst it makes sense in relation to protecting against the spread of an infection, the potential for misuse of this kind of data is perhaps even greater than that of health and location data. Authoritarian states know this – it’s been standard practice for spies for centuries. The Stasi’s files were full of details of who had met whom and when, and for how long – this is precisely the kind of data that a contact tracing system has the potential to gather. This is also why we should be hugely wary of establishing systems that enable it to be done easily, remotely and at scale. This isn’t just privacy as some kind of luxury – this is real concern about things that are done in the real world and have been for many, many years, just not with the speed, efficiency and cheapness of installing an app on people’s phones.

Some of this people ‘instinctively’ know – they feel that the intrusions on their privacy are ‘creepy’ – and hence resist. Businesses and government often underestimate how much people care and how much they resist – and how able they are to resist. In my work I have seen this again and again. Perhaps the most relevant here was the dramatic nine-day failure that was the Samaritans Radar app, which scanned people’s tweets to detect whether they might be feeling vulnerable and even suicidal, but didn’t understand that even this scanning would be seen as intrusive by the very people it was supposed to protect. They rebelled, and the app was abandoned almost as soon as it had started. The NHS’s own ‘care.data’ scheme, far bigger and grander, collapsed for similar reasons – it wanted to suck up data from GP practices into a great big central database, but didn’t get either the legal or the practical consent from enough people to make it work. Resistance was not futile – it was effective.

This resistance seems likely in relation to the contact tracing app too – not least because the resistance grows spectacularly when there is little trust in the people behind a project. And, as we shall see, the government has done almost everything in its power to make people distrust their project.

Magical thinking

The second part of the problem is what can loosely be called ‘magical thinking’. This is another thing that is all too common in what might loosely be called the ‘digital age’. Broadly speaking, it means treating technology as magical, and thinking that you can solve complex, nuanced and multifaceted problems with a wave of a technological wand. It is this kind of magic that Brexiters believed would ‘solve’ the Irish border problems (it won’t) and led anti-porn campaigners to think that ‘age verification’ systems online would stop kids (and often adults) from accessing porn (it won’t).

If you watched Matt Hancock launch the app at the daily Downing Street press conference, you could have seen how this works. He enthused about the app like a child with a new toy – and suggested that it was the key to solving all the problems. Even with the best will in the world, a contact tracing app could only be a very small part of a much bigger operation, and only make a small contribution to solving whatever problems they want it to solve (more of which later). Magical thinking, however, makes it the key, the silver bullet, the magic spell that needs just to be spoken to transform Cinderella into a beautiful princess. It will never be that, and the more it is thought of in those terms the less chance it has of working in any way at all. The magical thinking means that the real work that needs to go on is relegated to the background or eliminated altogether, replaced only by the magic of tech.

Here, the app seems to be designed to replace the need for a proper and painstaking testing regime. As it stands, it is based on self-reporting of symptoms, rather than testing. A person self-reports, and then the system alerts anyone who it thinks has been in contact with that person that they might be at risk. Regardless of the technological safeguards, that leaves the system at the mercy of hypochondriacs who will report the slightest cough or headache, thus alerting anyone they’ve been close to, or malicious self-reporters who either just want to cause mischief (scare your friends for a laugh) or who actually want to cause damage – go into a shop run by a rival, then later self-report and get all the workers in the shop worried into self-isolation.

These are just a couple of the possibilities. There are more. Stoics, who have symptoms but don’t take it seriously and don’t report – or people afraid to report because it might get them into trouble with work or friends. Others who don’t even recognise the symptoms. Asymptomatic people who can go around freely infecting people and not get triggered on the system at all. The magical thinking that suggests the app can do everything doesn’t take human nature into account – let alone malicious actors. History shows that whenever a technological system is developed the people who wish to find and exploit flaws in it – or different ways to use it – are ready to take advantage.

Magical thinking also means not thinking anything will go wrong – whether it be the malicious actors already mentioned or some kind of technical flaw that has not been anticipated. It also means that all these problems must be soluble by a little bit of techy cleverness, because the techies are so clever. Of course they are clever – but there are many problems that tech alone can’t solve.

The issue of trust

One of those is trust. Tech can’t make people trust you – indeed, many people are distinctly distrustful of technology. The NHS generates trust, and those behind the app may well be assuming that they can ride on the coattails of that trust – but that itself may be wishful thinking, because they have done almost none of the things that generate real trust – and the app depends hugely on trust, because without it people won’t download and won’t use the app.

How can they generate that trust? The first point, and perhaps the hardest, is to be trustworthy. The NHS generates trust but politicians do the opposite. These particular politicians have been demonstrably and dramatically untrustworthy, noted for their lies – Boris Johnson having been sacked from more than one job for having lied. Further, their tech people have a particularly dishonourable record – Dominic Cummings is hardly seen as a paragon of virtue even by his own side, whilst the manipulative social media tactics of the Leave campaign were remarkable for their effectiveness and their dishonesty.

In those circumstances, you have to work hard to generate trust. There are a few keys here. The first is to distance yourself from the least trustworthy people – the Vote Leave campaigners should not have been let near this with a barge pole, for example. The second is to follow systems and procedures in an exemplary way, building in checks and balances at all times, and being as transparent as possible.

Here, they’ve done the opposite. It has been almost impossible to find out what was going on until the programme was actually already in pilot stage. Parliament – through its committee system – was not given oversight until the pilot was already under way, and the report of the Human Rights Committee was deeply critical. There appears to have been no Data Protection Impact Assessment done in advance of the pilot – which is almost certainly in breach of the GDPR.

Further, it is still not really clear what the purpose of the project is – and this is also something crucial for the generation of trust. We need to know precisely what the aims are – and how they will be measured, so that it is possible to ascertain whether it is a success or not. We need to know the duration, what happens on completion – to the project, to the data gathered and to the data derived from the data gathered. We need to know how the project will deal with the many, many problems that have already been discussed – and we needed to know that before the project went into its pilot stage.

Being presented with a ‘fait accompli’ and being told to accept it is one way to reduce trust, not to gain it. All these processes need to take place whilst there is still a chance to change the project, and change it significantly – because all the signs are that a significant change will be needed. Currently it seems unlikely that the app will do anything very useful, and it will have significant and damaging side effects.

Misunderstanding Privacy – Part 2

…which brings us back to privacy. One of the most common misunderstandings of privacy is the idea that it’s about hiding something away – hence the facetious and false ‘if you’ve got nothing to hide you’ve got nothing to fear’ argument that is made all the time. In practice, privacy is complex and nuanced and more about controlling – or at least influencing – what kind of information about you is made available to whom.

This last part is the key. Privacy is relational. You need privacy from someone or something else, and you need it in different ways. Privacy scholars are often asked ‘who do you worry about most, governments or corporations?’ Are you more worried about Facebook or GCHQ? It’s a bit of a false question – because you should be (and probably are) worried about them in different ways, just as you’re worried about privacy from your boss, your parents, your kids, your friends in different ways. You might tell your doctor the most intimate details about your health, but you probably wouldn’t tell your boss or a bloke you meet in the pub.

With the coronavirus contact tracing app, this is also the key. Who gets access to our data, who gets to know about our health, our location, our movements and our contacts? If we know this information is going to be kept properly confidential, we might be more willing to share it. Do we trust our doctors to keep it confidential? Probably. Would we trust the politicians to keep it confidential? Far less likely. How can we be sure who will get access to it?

Without getting into too much technical detail, this is where the key current argument is over the app. When people talk about a centralised system, they mean that the data (or rather some of the data) is uploaded to a central server when you report symptoms. A decentralised system does not do that – the data is only communicated between phones, and doesn’t get stored in a central database. This is much more privacy-friendly, but does not build up a big central database for later use and analysis.

This is why privacy people much prefer the idea of a decentralised system – because, amongst other things, it keeps the data out of the hands of people that we cannot and should not trust. Out of the hands of the people we need privacy from.

The government does not seem to see this. They’re keen to stress how well the data is protected in ‘security’ terms – protected from hackers and so forth – without realising (or perhaps admitting) that the people we really want privacy from, the people who present the biggest risk to the users, are the government themselves. We don’t trust this government – and we should not really trust any government, but build in safeguards and protections from those governments, and remember that what we build now will be available not just to this government but to successors, which may be even worse, however difficult that might be to imagine.

Ways forward?

Where do we go from here? It seems likely that the government will try to push on regardless, and present whatever happens as a great success. That should be fought against, tooth and nail. They can and should be challenged and pushed on every point – legal, technical, practical, and trust-related. That way they may be willing to move to a more privacy-friendly solution. Such solutions do exist, and it’s not too late to change.

Back in 2015, Andrew Parker, the head of MI5,


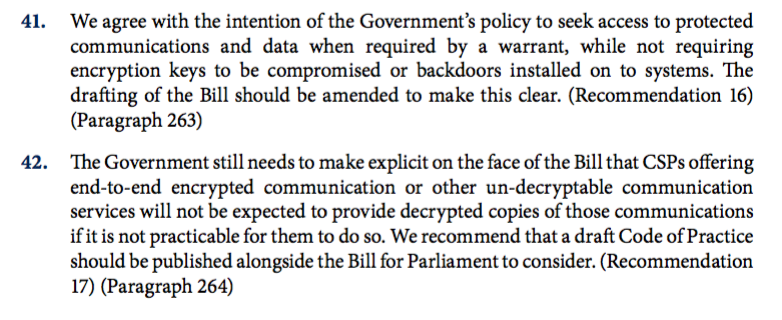
